Online Lottery Website

For real payouts, high rates, and large discounts, bet on the lottery online. We offer online lottery betting with fast deposits and withdrawals. The site has been in operation for more than 10 years, so you can be confident in its security and in its 24-hour service standard, especially if you are among the many players who favor online lotteries. We are one of the best providers of stock lottery betting, with lotteries from every country, results published on time with no long waits, the highest payout rates, and more promotional discounts than anywhere else. For a lottery site that really pays, choose 999LUCKY, the online lottery betting site.

|Ping Pong lottery|Lucky Point|Malay Pharuay lottery|Lao lottery|Yi Ki lottery|Which lottery site is best|

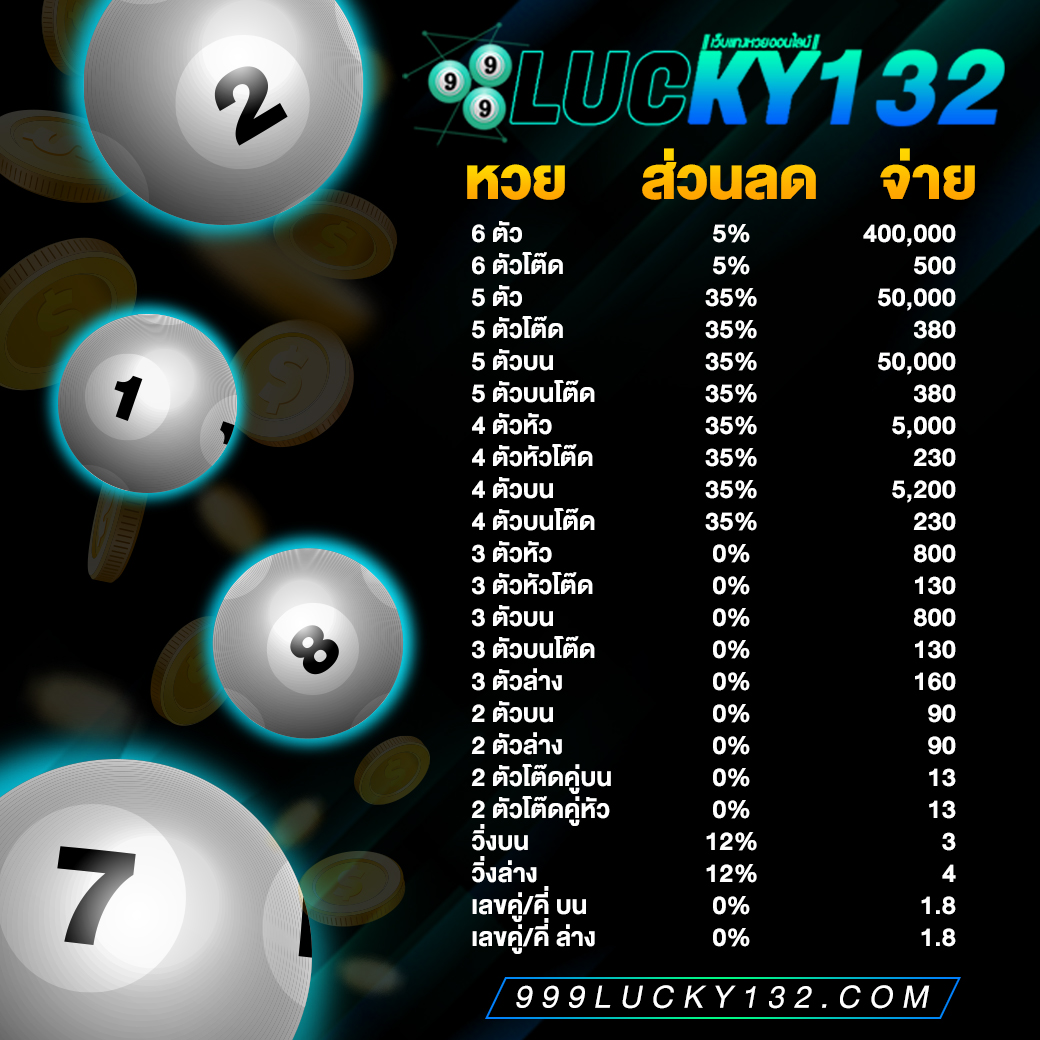

999lucky Payout Rates

Another way to profit from lottery betting is to choose a site with a high payout rate, since each site sets its own rates. You should still consider whether the rates a site advertises are realistic; otherwise you may win and never receive your money.

If you are looking for a lottery betting site with high payout rates that is stable, secure, and trustworthy, let 999lucky handle the finances for you. This online lottery betting site is honest and straightforward, pays out for real, does not cheat, and is 100% secure. The maximum payout rate is 800 baht per baht wagered, or you can choose to take a discount of up to 40% instead.

999Lucky Online Lottery Betting Site

The site has been in service for a long time and is trusted by lottery players across Thailand. It offers a wide selection of lotteries, and you can check lottery results for free on the site. Sign up now: deposits are easy, and withdrawals are processed within 2 minutes by an automated system. There is no minimum deposit; you can play with as little as 1 baht. It is the best online lottery betting site available right now, with high payout rates and stable finances. 999LUCKY has been operating for more than 9 years, which has made it popular, and most players are very satisfied with the site's service. Deposits and withdrawals are fast and prompt.

Deposits and withdrawals within 5 minutes. Secure finances. 100% automated system.

Online Lottery Betting Site

If you are looking for an online lottery site, we recommend 999LUCKY, an online lottery site that pays more than other lottery sites. It covers the Lao lottery, Hanoi lottery, Yi Ki lottery, Vietnamese lottery, Thai government lottery, Thai stock lottery, foreign stock lotteries, and many others, with a variety of play formats. Payout rates are high, and there are welcome promotions for new members and daily activities with prizes to win.

999LUCKY Online Lottery | Deposits and withdrawals open 24 hours